Implementations of Monte Carlo and Temporal Difference learning.

In the previous post, I discussed two different learning methods for reinforcement learning, Monte Carlo learning and temporal difference learning. I then provided a unifying view by considering

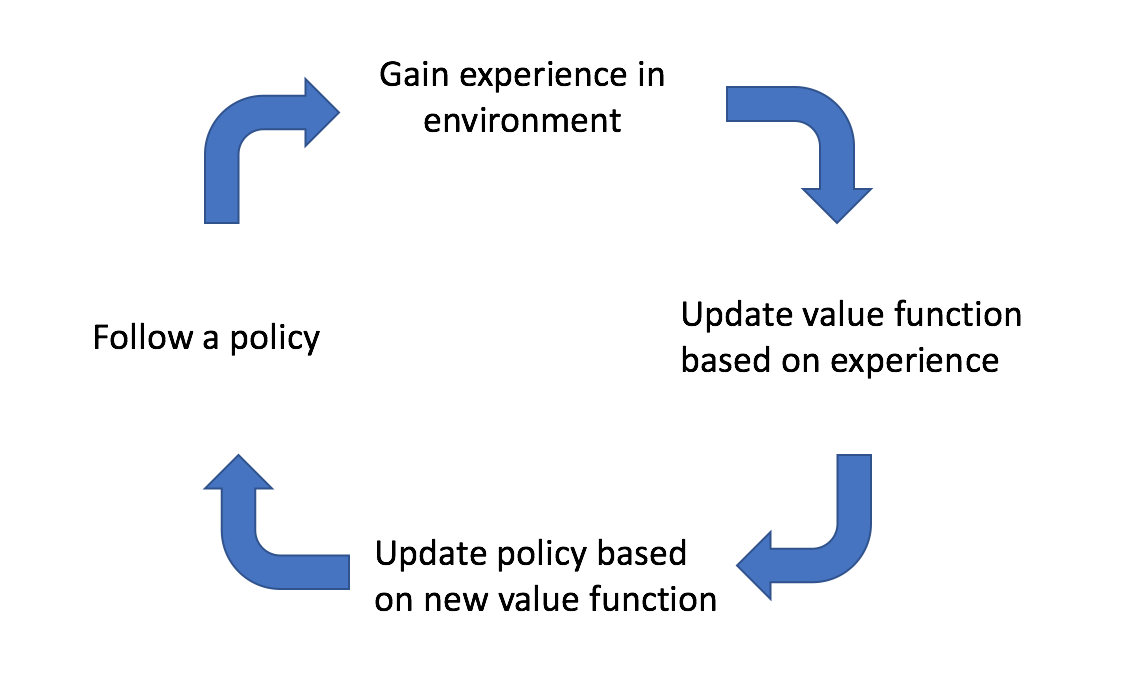

We start off by following an initial policy and explore the environment. As we observe the results of our actions, we can update our estimate of the true value function that describes the environment. We can then update our policy by querying the best actions to take according to our newly estimated value function. Ideally, this cycle continues until we've sufficiently explored the environment and have converged on an optimal policy.

In this post, I'll discuss specific implementations of the Monte Carlo and TD approach to learning. However, I first must introduce the concept of on-policy and off-policy learning. A method is considered on-policy if the agent follows the same policy which it is iteratively improving. However, there are cases where it would be more useful to have the agent follow a behavior policy while learning a target policy. Such methods, where the agent doesn't follow the same policy that it is learning, are considered off-policy.

Monte Carlo learning

The Monte Carlo approach approximates the value of a state-action pair by calculating the mean return from a collection of episodes.

GLIE Monte-Carlo

GLIE Monte-Carlo is an on-policy learning method that learns from complete episodes. For each state-action pair, we keep track of how many times the pair has been visited with a simple counter function.

For each episode, we can update our estimated value function using an incremental mean.

Here,

We'll adopt an

Temporal difference learning

The temporal difference approach approximates the value of a state-action pair by comparing estimates at two points in time (thus the name, temporal difference). The intuition behind this method is that we can formulate a better estimate for the value of a state-action pair after having observed some of the reward that our agent accumulates after having visited a state and performing a given action.

Sarsa

Sarsa is an on-policy

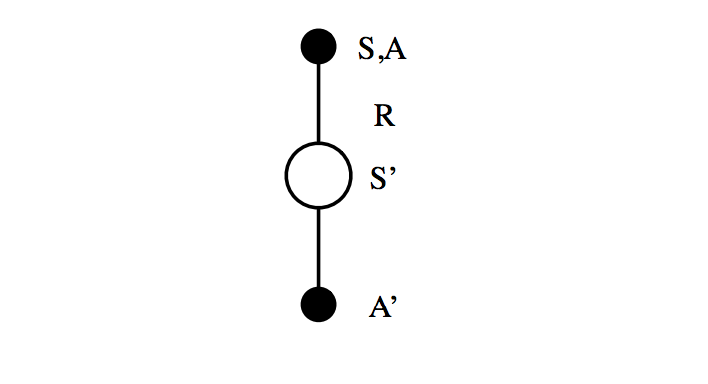

We start off in state

Photo by Richard Sutton

We'll define our policy as

The value function update equation may be written as

where we include one step of experience combined with a discounted estimate for future returns as our TD target.

Q-learning

Q-learning is an off-policy

We'll define our behavior policy, the policy that determines the agent's actions, as

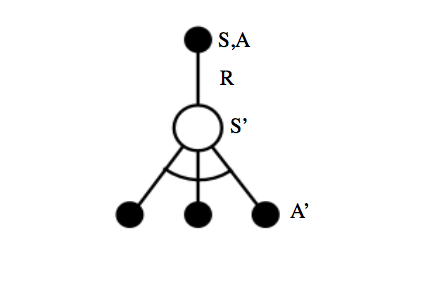

Rather than looking at a sequence of State-Action-Reward-State-Action, we instead take a sequence of State-Action-Reward-State and refer to our target policy for the subsequent action. Because our target policy is greedy, there is no stochastic behavior (

Photo by Richard Sutton

The value function update equation for Q-learning may be written as

where we similarly include one step of experience combined with a discounted estimate for future returns as our TD target; however, we've removed the exploratory behavior from our estimate for future returns in favor of selecting the optimal trajectory, increasing the accuracy of our TD target.

Hybrid approach to learning

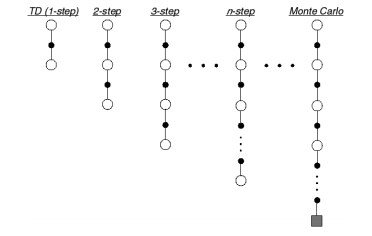

So far, we've discussed methods which seek a more informed valuation of a state-action pair by two main approaches, a one-step lookahead combined with a guess (the temporal difference approach) and a full sequence lookahead (the Monte Carlo approach). Let us now consider the spectrum of algorithms that lie between these two methods by expanding our Sarsa implementation to include

The general Sarsa algorithm considers the case of

At the other end of the spectrum, the Monte Carlo approach, which considers a full sequence of observed rewards until the agent reaches a terminal timestep

However, we could develop

Photo by Richard Sutton

The

The value function update equation may be written as

where

Sarsa

In the last post, I discussed a general method for combining the information from all of the

Using a weight of

The forward-view Sarsa

This method will, for each state-action pair, combine the returns from a one-step lookahead, two-step lookahead, ...,

However, we need to develop a backward-view algorithm in order to enable our agent to learn online during each episode. Otherwise, we'd need to wait until the agent reaches a terminal state to do the forward-view algorithm described above. To do this, we'll need to replace our

As covered in the last post, we'll initialize all eligibilities to zero, increment the eligibility of visited states, and decay all eligibilities at each time step.

Our value function is updated in proportion to both the TD error and the eligilibilty trace.

This method is an on-policy approach, meaning that next actions are selected according to the learned policy.