Logistic regression.

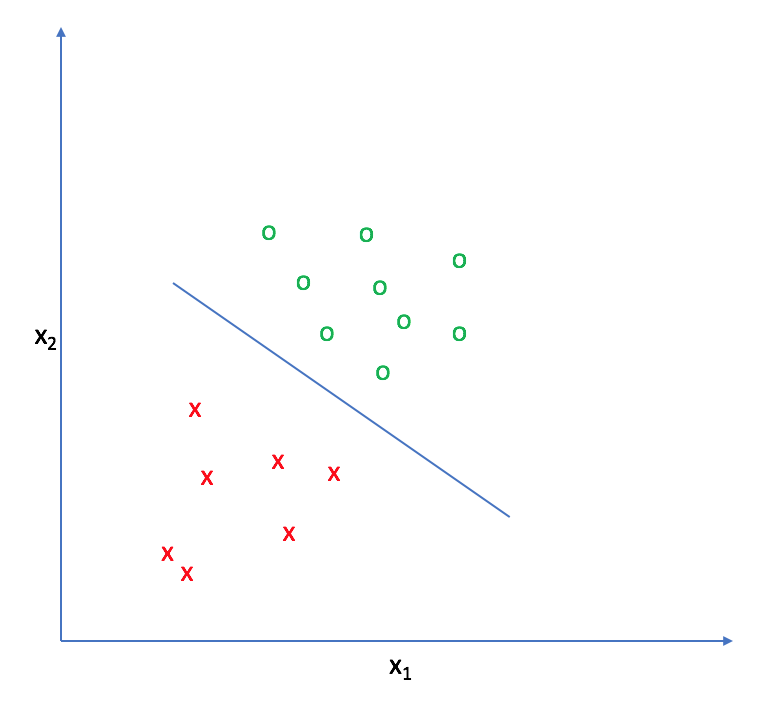

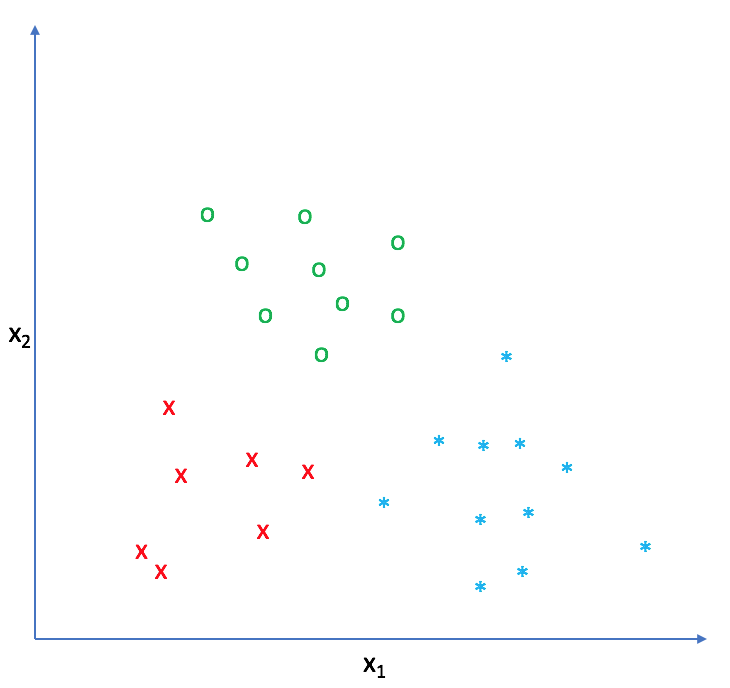

The goal of logistic regression, as with any classifier, is to figure out some way to split the data to allow for an accurate prediction of a given observation's class using the information present in the features. (For instance, if we were examining the Iris flower dataset, our classifier would figure out some method to split the data based on the following: sepal length, sepal width, petal length, petal width.) In the case of a generic two-dimensional example, the split might look something like this.

The decision boundary

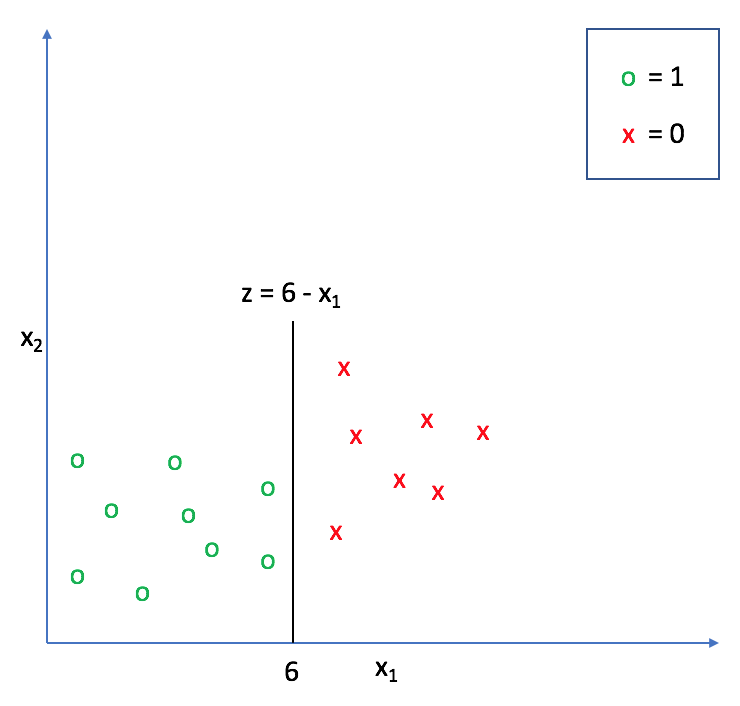

Let's suppose we define a line that is equal to zero along this decision boundary. This way, all of the points on one side of the line take on positive values and all of the points on the other side take on negative values.

For example, in the following graph,

We can extend this decision boundary representation as any linear model, with or without additional polynomial features. (If this model representation doesn't look familiar, check out my post on linear regression).

Adding polynomial features allows us to construct nonlinear splits in the data in order to draw a more accurate decision boundary.

As we continue, think of

The logistic function

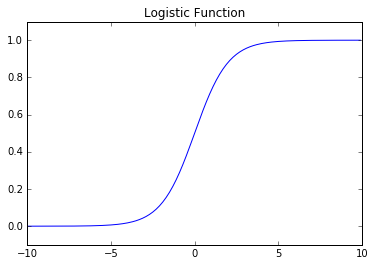

Next, let's take a look at the logistic function.

As you can see, this function is asymptotically bounded between 0 and 1. Further, for very positive inputs our output will be close to 1 and for very negative inputs our output will be close to 0. This will essentially allow us to translate the value we obtain from

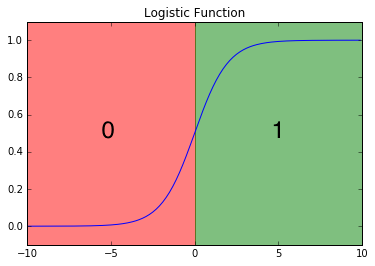

At the decision boundary

where

More concretely, we can write our model as:

Thus, the output of this function represents a binary prediction for the input observation's class.

Another way to interpret this output is to view it in terms of a probabilistic prediction of the true class. In other words,

Because the class can only take on values of 0 or 1, we can also write this in terms of the probability that

For example, if

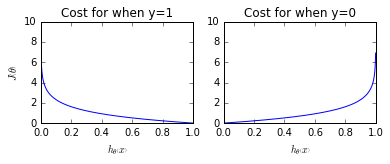

The cost function

Next, we need to establish a cost function which can grade how well our model is performing according to the training data. This cost function,

In linear regression, we used the squared error as our grading mechanism. Unfortunately for logistic regression, such a cost function produces a nonconvex space that is not ideal for optimization. There will exist many local optima on which our optimization algorithm might prematurely converge before finding the true minimum.

Using the Maximum Likelihood Estimator from statistics, we can obtain the following cost function which produces a convex space friendly for optimization. This function is known as the binary cross-entropy loss.

These cost functions return high costs for incorrect predictions.

More succinctly, we can write this as

since the second term will be zero when

Finding the best parameters

Gradient descent

As a reminder, the algorithm for gradient descent is provided below.

Taking the partial derivative of the cost function from the previous section, we find:

And thus our parameter update rule for gradient descent is:

Advanced optimizations

In practice, there are more advanced optimization techniques that perform much better than gradient descent including conjugate gradient, BFGS, and L-BFGS. These advanced numerical techniques are not within the current scope of this blog post.

In sklearn's implementation of logistic regression, you can define which optimization is used with the solver parameter.

Multiclass classification

While binary classification is useful, it'd be really nice to be able to train a classifier that can separate the data into more than two classes. For example, the Iris dataset I mentioned in the beginning of this post has three target classes: Iris Setosa, Iris Versicolour, and Iris Virginica. A simple binary classifier simply wouldn't work for this dataset!

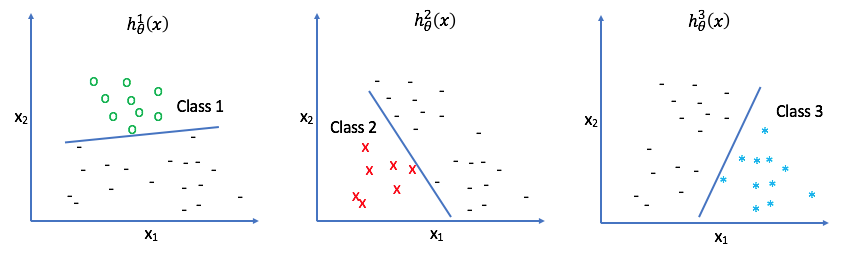

However, using a one-vs-all (also known as one-vs-rest) classifier we can use a combination of binary classifiers for multiclass classification. We simply look at each class, one by one, and treat all of the other classes as the same. In a sense, you look at "Class 1" and build a classifier that can predict "Class 1" or "Not Class 1", and then do the same for each class that exists in your dataset.

In other words, each classifier

Summary

Each example has an

Our hypothesis function predicts the likelihood that the example

where

In order to build a classifier capable of distinguishing between more than two classes, we simply build a collection of classifiers which each return the probability the observation belongs to a specific class.

For a dataset with